Theory Chapter 8:

Mixed Strategies

Note to the Teacher: ....................

1. Mixed Strategies

We have seen that Nash equilibria are likely outcomes of games.

But what happens in games without Nash equilibrium in pure strategies? Even very

simple games like ROCK-SCISSORS-PAPER do not have any such Nash equilibrium.

Whereas in games with pure Nash equilibria players have even an interest of communicating

their strategy to the other player before the game,

in ROCK-SCISSORS-PAPER it is crucial to leave your opponent in the dark about

what you are planning. You want to surprise your opponent, and such a surprise

is best achieved by surprising yourself. This could be done by delegating

the decision about your strategy to a random device.

This is essentially the idea of a mixed strategy.

An example for a mixed strategy in

ROCK-SCISSORS-PAPER is to play "rock", "scissors", or "paper" with probabilities

50%, 25%, or 25%, respectively.

Before the game is played, the player decides randomly, based on these probabilities which

pure strategy to use. Pure strategies can also be viewed as mixed strategies where one

strategy is chosen with probability 100% and the others with probabilities 0%.

If we add the above mentioned mixed strategy (50% rock, 25% scissors, 25% paper)

as an option for the first player

in ROCK-SCISSORS-PAPER, then the expected payoffs for player 1 against player 2's

pure strategies Rock, Scissors, Paper, are 0, 0.25, -0.25 respectively.

| Rock | Scissors | Paper |

| Rock | 0 | 1 | -1 |

| Scissors | -1 | 0 | 1 |

| Paper | 1 | -1 | 0 |

| 50-25-25 mix | 0 | 0.25 | -0.25 |

If the first player, Ann, plays the mixed strategy and Beth plays Rock,

then with 50% probability there will be a tie Rock versus Rock, with 25%

probability Beth will win (Beth's Rock against Ann's Scissors), and with 25%

probability Ann will show Paper and win against Beth's Rock.

Thus the expected payoff for Ann when playing this mixed strategy against

Beth's Rock equals 50%·0 + 25%·(-1) + 25%·1 = 0.

Thus the values in the fourth row are expected values of the corresponding values in

the other rows and same column,

using the probabilities of the mix. For instance, the second value in the fourth row is

50%·1 + 25%·0 + 25%·(-1) = 0.25,

and the third one

50%·(-1) + 25%·1 + 25%·0 = -0.25.

Even though this new mixed strategy doesn't dominate any of the pure strategies,

the newly added mixed strategy may be attractive to

a player aiming at the maximin strategy since it guarantees a payoff

of -0.25 compared to -1 in the other three cases.

Of course, the other player Beth is also entitled to mixed strategies. We assume that Beth

chooses a mix of 25% rock, 50% scissors, 25% paper. Inserting this

25-50-25 mix as another one of Beth's options, we obtain the following bimatrix:

| Rock | Scissors | Paper | 25-50-25 Mix |

| Rock | 0 | 1 | -1 | 0.25 |

| Scissors | -1 | 0 | 1 | 0 |

| Paper | 1 | -1 | 0 | -0.25 |

| 50-25-25 mix | 0 | 0.25 | -0.25 | 0.0625 |

The new values are computed as before, as expected values, using the payoffs of the same row,

weighted by the probability of the mix. For instance, the last entry in the first row is computed as

25%·0 + 50%·1 + 25%·(-1) = 0.25.

Even the payoff for 50-25-25 mix against 25-50-25 mix is computed this way, using the fourth row values,

as 25%·0 + 50%·0.25 + 25%·(-0.25) = 0.0625.

Maybe not too surprisingly,

Ann's mix, with its emphasis on rock, beats the Beth's mix, that is heavy on scissors.

1.1 Best Response

Class Activity:

Find a good response to Jane

playing the 50% rock, 25% scissors, 25% paper strategy in

this applet. Play again

until you have won 3 times more than Jane. I know you can do it.

As can be seen in the matrix above, "Paper" is better

against Ann's 50-25-25 mix than "Rock" or "Scissors", since it gives an expected payoff of 0.25 for Beth.

The opponent emphasizes "Rock", so you must emphasize the move beating "Rock",

which is "Paper".

Of course we could also look for best responses among the mixed strategies, but why would

we mix strategies of different value---we would just dilute the best response.

This is also true in general. Since the payoffs of a mixed strategies are weighted averages

of the payoffs for the pure strategies, the maximum payoff is always achieved at a pure strategy.

Fact: Among the best responses to mixed strategies of the other players is always also

a pure strategy.

The mixed strategies that are best responses are just all combinations

of the best response pure strategies. To formulate this, the notion of support

may be useful---the support of a mixed strategy are all pure strategies that occur with nonzero probability.

Indifference Theorem:

A mixed strategy of Ann is a best response to mixed strategies of the other players

if each pure strategy in its support

is also a best response to to the given mixed strategies of the other players.

So do we even need these fancy mixed strategies, if we can always react with old-fashioned

pure strategies? This is discussed next.

1.2 Brown's fictitious play

Assume a game is repeated over and over. How would we find out what mixed strategy

the other player is using? He or she may not tell us.

Well, you may want to observe very closely what the

other player is doing, and react accordingly.

You may want to always play the best response pure strategy to the

observed mixed strategy played by the other player.

However, the other player may observe you as well, observe your strategy as

a mixed strategy, and react accordingly. That means, you will adapt your play

according to the play of the other player, and the other player adapts

his or her play according to your observed play.

Even though both players think they are always playing a pure strategy,

it looks as if they would play mixed strategies, since their pure strategies

are changing over time. In their histories, they play the

different pure strategies with some relative frequencies, which are perceived as

probabilities by the other player.

These relative frequencies do change over time,

but maybe eventually less and less---they might converge.

This idea has been formulated by G.W. Brown [Brown 51].

He proposed that for two-person zero-sum games such a process

will eventually converge and that these resulting

mixed strategies are both best responses to each other.

This process can be simulated in the following Excel sheet.

In ROCK-SCISSORS-PAPER, the resulting mixes of the histories are 1/3 Rock,

1/3 Scissors, and 1/3 Paper.

The following example illustrates that the pure strategies do not always occur with

equal frequency:

CRASH ROCK-SCISSORS-PAPER is a simultaneous zero-sum game played by two

players. Each one has three moves, "Rock", "Scissors", and "Paper". "Scissors" wins against

"Paper", "Paper" wins against "Rock", but "Rock" wins big against "Scissors", crashing it

with a loud sound, and giving a double payoff to the winner. It is ROCK-SCISSORS-PAPER

with this modified payoff matrix:

| Rock | Scissors | Paper |

| Rock | 0 | 2 | -1 |

| Scissors | -2 | 0 | 1 |

| Paper | 1 | -1 | 0 |

Try the matrix in the

same Excel sheet.

Both players will eventually play the 25%-25%-50% mix. Although "Scissors"

seems to be the weakest move in this modified game, it is played more often than the other two moves.

To give another example, this time for a non-simultaneous game, let us look at the normal form

of VNM POKER(2,4,2,3) discussed in the previous two chapters. After eliminating weakly dominated

strategies, we get the following matrix:

Running it through our

Excel sheet, we get a mix of

1/3 "CR" and 2/3 "RR" for Ann, and 2/3 "FC" and 1/3 "CC" for Beth.

Note that these mixed strategies translate into Ann raising with

probability 2/3 when holding a low-value card, and always raising when holding a high-value card,

whereas Beth would call with probability 1/3 when holding a low-value card, and always when holding a

high-value card. The expected payoff for Ann when both play their mix equals 2/21.

Brown's fictitious play is important even if you play the same

opponent only once. In a sense, both players would simulate thousands of

rounds in their heads, and arrive at the mixed strategies.

That's where the word "fictitious" comes from.

Even though, after this one game, nobody can observe your

probabilities by which you have chosen the pure strategies, it is important to have them

and to select your move accordingly.

1.3 Mixed Maximin Strategy, Mixed Security Level, and Linear Programs

Up to now, the maximin strategy was supposed to be a pure strategy.

From now on we also allow mixed strategies.

First note that for every mixed strategy of Ann,

Ann's worst outcome is always achieved at a pure strategy of Beth.

The reasoning is the same as for the fact mentioned above

that among the best responses, there is always a pure strategy one.

Ann's payoff for any mixed strategy of Beth is just a weighted average of Ann's

payoffs for the different pure strategies of Beth,

and such a weighted average can not be less than the smallest

value.

For every one of the infinitely many mixed strategies of Ann, we next create the row

of the payoffs versus the finitely many pure strategies of Beth.

The mixed maximin strategy is the mixed strategy

with the highest lowest entry in the row---it is the mixed strategy that guarantees

the highest expected payoff, which we may call the

mixed security level for Ann.

Take ROCK-SCISSORS-PAPER2 as example,

where whenever Ann plays scissors the bid is doubled.

The Payoff matrix of this zero-sum game is as follows:

| BRock | BScissors | BPaper |

| ARock | 0 | 1 | -1 |

| AScissors | -2 | 0 | 2 |

| APaper | 1 | -1 | 0 |

We give a few rows. The formula for calculating the entries in a row is given in the last row.

| BRock | BScissors | BPaper |

| ARock | 0 | 1 | -1 |

| AScissors | -2 | 0 | 2 |

| APaper | 1 | -1 | 0 |

| 1/3-1/3-1/3-mix |

-1/3 | 0 | 1/3 |

| 1/4-1/4-1/2-mix |

0 | -1/4 | 1/4 |

| 2/5-1/5-2/5-mix |

0 | 0 | 0 |

| p1/p2/p3-mix |

p1·0 + p2·(-2) + p3·1 |

p1·1 + p2·0 + p3·(-1) |

p1·(-1) + p2·2 + p3·0 |

The lowest entries in the first six rows are -1, -2, -1, -1/3, -1/4, and 0.

So, among these six pure and mixed strategies, the one with the highest guarantee for Ann

is the 2/5-1/5-2/5-mix. But keep in mind that there are infinitely many rows,

there might be rows with better guarantees coming. Or not?

Let's now move to a general 3 × 3 bimatrix.

| Left | Middle | Right |

| Up | A1,1, B1,1 | A1,2, B1,2 | A1,3, B1,3 |

| Middle | A2,1, B2,1 | A2,2, B2,2 | A2,3, B2,3 |

| Down | A3,1, B3,1 | A3,2, B3,2 | A3,3, B3,3 |

Ann's task is to maximize the minimum of the three values

p1·A1,1 + p2·A2,1 + p3·A3,1,

p1·A1,2 + p2·A2,2 + p3·A3,2, and

p1·A1,3 + p2·A2,3 + p3·A3,3,

by choosing her three probabilities

p1, p2, and p3

(non-negative, with the sum of the three probabilities equal to 1).

We could reformulate this by saying that

- Ann has to choose four values, v and p1, p2, and p3,

- obeying

- p1 ≥ 0,

- p2 ≥ 0,

- p3 ≥ 0,

- p1+p2+p3=1,

- v ≥ p1·A1,1 + p2·A2,1 + p3·A3,1,

- v ≥ p1·A1,2 + p2·A2,2 + p3·A3,2, and

- v ≥ p1·A1,3 + p2·A2,3 + p3·A3,3,

- such that v is maximized under these restrictions.

This is a so-called linear program.

Using methods from this rather sophisticated topic,

(which will not be covered in this book)

one finds that in the example ROCK-SCISSORS-PAPER2 above, p1=2/5,

p2=1/5, p3=2/5 is indeed the only mixed maximin strategy, with

mixed security level of 0.

For Beth, the mixed maximin strategy is

q1=1/3,

q2=1/3, q3=1/3.

1.3 Punishment Value

Let's go back to maximin value and strategy.

Ann will play her maximin strategy if she assumes that all other players

would always find moves that would minimize Ann's payoff for her move chosen.

Of course, such fear is a little paranoid for two reasons: First, why would the opponents know

what move Ann choses, after all the game is simultaneous, and secondly, why would

they even bother, usually they want to maximize their payoff, not minimize

Ann's (although in two-person zero-sum games this is the same). Now if

if the other players

really would want to punish Ann, they would select moves such that, no matter what

Ann choses, she still can get only limited payoff. For each move combination

of all players except Ann, we look at all payoffs for Ann (!) for Ann's different options.

We choose the maximum of these payoffs for each such move combination. Then we

select that move combination with the minimum such value. This is called the

punishment value for Ann.

Remember that in a two-player game, the maximin value for Ann is obtained by

looking on Ann's payoffs in the rows, selecting the lowest such value in each

row, and then selecting the row with the maximum such minimum. In order to obtain the

punishment value for Ann, we also look only on Ann's payoffs, but we start with

the columns. In each such column we fix the maximum payoff in each column,

and after that we select the column with the minimum such number.

The role of rows and columns is reversed, and the

ordering of Min and Max is reversed; for this reason the punishment value is

sometimes also called the minimax value.

For example, in the

ADVERTISING game,

the maximin value and the punishment value are both 3. A can

guarantee a payoff of 3 by advertising, but Beth can guarantee that A gets no more than

3 by advertising.

In the

BATTLE OF THE SEXES example,

Adam's maximin move is to go to soccer, guaranteeing the maximin value of 1 to Adam,

whereas the punishment move for Beth is to go to the ballet, restricting

Adam's payoff to 2 or less. Therefore the punishment value for Adam is 2.

Theorem:

The punishment value for a player is always larger or equal to the

maximin value of the same player.

If we allow all mixed strategies,

we even get equality in case of only two players:

Minimax Theorem (von Neumann, 1928):

For every finite two-person game,

the maximin value for Ann, if allowing all mixed strategies, equals the

punishment value for Ann (and the same for Beth, of course).

However, this is not true for three or more players.

Give an example where it is not true.

The next result is fundamental for finding Nash equilibria in mixed strategies,

as we will see later in Section 3.

Indifference Theorem:

A mixed strategy of Ann is a best response to some (mixed) strategy

of Beth if each pure strategy occuring with nonempty probability in Ann's

mixed strategy is also a best response to Beth's strategy.

Otherwise we would increase

the proportion of Ann's best response pure strategy and get an improvement.

For two-person zero-sum games, there follows the somewhat strange fact that

in some situations, if your opponent is supposed to mix all pure strategies,

then it doesn't even matter whether she mixes, or which pure strategy she uses,

as long as you stick to your mixed strategy. This happens in ROCK-SCISSORS-PAPER:

If you use all three possibilities with probability 1/3, then anything your opponent

does gives an expected outcome of 1/2 for each. However, in more complex situations

still "most" mixed strategies are inferior against a mixed strategy that is part of a Nash equilibrium.

Examples are VNM POKER(4,4,3,5), for instance,

and also Morra.

2. Mixed Nash Equilibria

A Nash equilibrium of mixed strategies is called a mixed Nash equilibrium.

2.1 Two-player Zero-Sum Games

These special games have two nice features:

von Neumann's Theorem [1928]:

Every finite two-person zero-sum game has at least one Nash equilibrium in

mixed strategies. They are the maximin mixed strategies.

Actually von Neumann's proved a more general result, his famous "Minimax Theorem",

from which the theorem above follows easily.

Such a Nash equilibrium can also be obtained by Brown's fictitious play process

described in the subsection above:

Theorem (Julia Robinson) [Robinson 1951]:

If two players play a zero-sum game in

normal form repeatedly, and if in each round each player chooses the best response pure

strategy against the observed mixed strategy of the total history of the other player,

then the mixed strategies of the whole history converge to a pair of mixed strategies

forming a Nash equilibrium.

Example: ELECTION(3,3,5) and also ELECTION(2,3,5) or ELECTION(2,2,5)

2.2 Non Zero-Sum Games or More Players

Things become more complicated in these cases. Let me illustrate this with the following

two examples, one game is zero-sum, the other isn't:

| Game 1: | Left | Right |

| Up | 0, 0 | 10, -10 |

| Down | 5, -5 | 0, 0 |

| |

| Game 2: | Left | Right |

| Up | 0, 0 | 10, 5 |

| Down | 5, 10 | 0, 0 |

|

Note however that the payoffs for Ann (but not for Beth) are identical

in the corresponding situations.

Let's start with the zero-sum Game 1:

Ann's optimal mixed strategy is to choose "Up" with probability 1/3

(and therefore "Down" with probability 2/3),

and Beth best chooses "Left" with probability 2/3. The expected payoff

for Ann in this mixed Nash equilibrium is 10/3 for Ann, and therefore -10/3 for Beth.

Therefore this strategy is the maximin strategy for Ann if mixed strategies are allowed.

But since the maximin strategy and

value only depend on Ann's payoffs, and these don't change between Game 1 and Game 2,

the strategy with 1/3 "Up" and 2/3 "Down" is also the maximin strategy for Ann in Game 2

(with mixed strategies allowed). Since Game 2 is symmetric, Beth has a similar

maximin strategy of choosing 1/3 "Left" and 2/3 "Right". The expected payoff for both

when both play this strategy is 10/3. But this is not a Nash equilibrium in Game 2---every

deviation of Ann in the direction of more "Up" is rewarded, as is every deviation of

Beth in the direction of more "Left". Actually we will show below that Game 2, if mixed strategies

are allowed, has three mixed

Nash equilibria:

- In the first, Ann chooses "Up" with probability 2/3 and Beth chooses "Left" with

probability 2/3, having the same expected payoff of 10/3 for both, but no reason to

deviate.

- In the second one Ann chooses "Up" and Beth chooses "Right". Payoffs are 10 respectively 5.

- The last Nash equilibrium is where Ann chooses "Down" and Beth "Left".

Payoffs are 5 and 10.

BAUSTELLE

Although the maximin strategies do not necessarily form a mixed Nash equilibrium for general games,

mixed Nash equilibriua always exist, even for non-zero-sum games with an arbitrary number of players:

Nash's Theorem (1950): Every finite game has at least one

Nash equilibrium in pure or mixed strategies.

The mathematician John Forbes Nash Jr. (*1928) obtained this result in his Ph.D. dissertation

He was awarded the 1994

Nobel Prize in Economics (shared with Reinhard Selten and John Harsanyi)

for this result and some other papers written around 1950-1954. Mathematically

more challenging were his

results about questions in differential geometry

(Every Riemann manifold can be embedded into some Rn)

and the theory of partial differential equations

(1957: Theorem of de Giorgi and Nash, answering one of Hilbert's problems).

In the sixties he was candidate for the Fields medal, the Mathematics equivalent

of the Nobel Prize. According to John Milnor,

"Nash's prize work is an ingenious but not surprising

application of well-known methods, while his subsequent mathematical work

is far more rich and important" [Milnor 1998].

Nash was suffering from paranoid schizophrenia for a long time.

von Neumann's achievement in Game Theory was to put the topic on the agenda

and of course, his famous Minimax Theorem. However, von Neumann and Morgenstern's

monograph concentrate very much on two-person zero-sum games, which really don't occur

that often outside of literal games. Nash's achievement in Game Theory was to clarify the

distinction between cooperative and non-cooperative games, to shift emphasis from two-person

zero-sum games to general non-cooperative games, and to show that every such game has a

(Nash-) equilibrium in mixed strategies. Furthermore, he introduced four axioms for

bargaining that guarantee a unique solution.

3. Computing Mixed Nash equilibria

It is relatively easy to check whether a bunch of mixed strategies, one for every player, forms a

Nash equilibrium:

According to the Indifference Theorem,

all we have to do is to find for every player

all pure strategy best responses to the mixed strategies of the others,

and check whether all pure strategies in the support of that player belong to them.

But the question is how to find them. And this is in general not easy, even when the normal

form is given. Without that, if the game is described by its extensive form,

even calculating the normal form may be

not feasible, since it may be far too large. An example of such a game is

DMA Soccer II.

In the following we assume that the normal form is given.

What should be done first is to eliminate all strictly dominated pure strategies from the normal form:

Fact: A strictly dominated pure strategy never occurs in the support of a

Nash equilibrium mixed strategy, but a weakly dominated strategy may.

As mentioned above, for the special two-player zero-sum games, the problem to find Nash equilibria

in mixed strategies can be formulated as a Linear Program.

And for such problems, George Dantzig developed around 1947

a solution method, the so-called "Simplex Algorithm." This method is in practice quite fast,

although there are a few artificial cases where the algorithms needs long. But later, other algorithms have

been found that always find a solution in time bounded by a polynomial in the number of rows and columns.

For general games, if the numbers of rows and columns are small, the Indifference Theorem

can be used. Then it suffices to solve a few systems of linear equations to obtain all mixed Nash equilibria.

We will describe details below for two-player games and bimatrices of sizes 2 × 2, 2 × n, 3 × 3,

and also for three players with two options each.

This method gets infeasible for large number of options. Although in a 2 player game where the normal form

has 10 rows and columns, we need to solve systems with up to 10 equations and 10 variables,

which is not too difficult using technology, the number of such systems we need to solve raises

already to more than 1,000,000. More sophisticated approaches are needed.

For 2-player games, the best approach so far is an algorithm found by Lemke and

Howson. By the way, they also showed that under that conditions the number of Nash equilibria must be odd

---except in degenerate cases. Still, this algorithm has exponential running time in the

worst case [Lemke/Howson 1964].

Finally Brown's fictitious play can be used to get approximate values of mixed Nash equilibria.

However, the method does not always work. Moreover convergence may be slow---even after

say 50000 iteration you may not be within say 1% of the probabilities.

And if there are more than one mixed Nash equilibria,

it may be that Brown's process converges only to one of them in most cases.

Here is an applet

where you can compute mixed Nash equilibria in zero-sum games.

For non-zero-sum games you can use this one.

In the following we will discuss methods for several small cases.

3.1 Small 2-player zero-sum games

2 × n zero-sum games

If Ann mixes between two choices,

the probability p for her first move obviously implies that

the probability for her second move must be 1-p. For such a mix,

Ann's payoff provided Beth plays some of her pure strategies is a linear function in p, with

the cases p=0 and p=1 being the payoffs in the pure cases.

So for Beth's ith move we draw the straight line from (0,A1,i) to (1,A2,i).

We then draw the curve always following the lowest of these lines. The largest value of this curve

is the security level for Ann, and the corresponding p-value belongs to

Ann's Nash equilibrium mix.

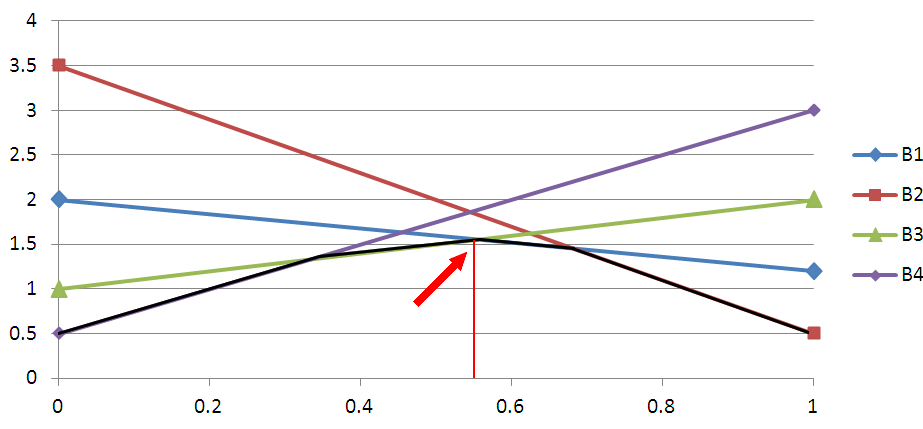

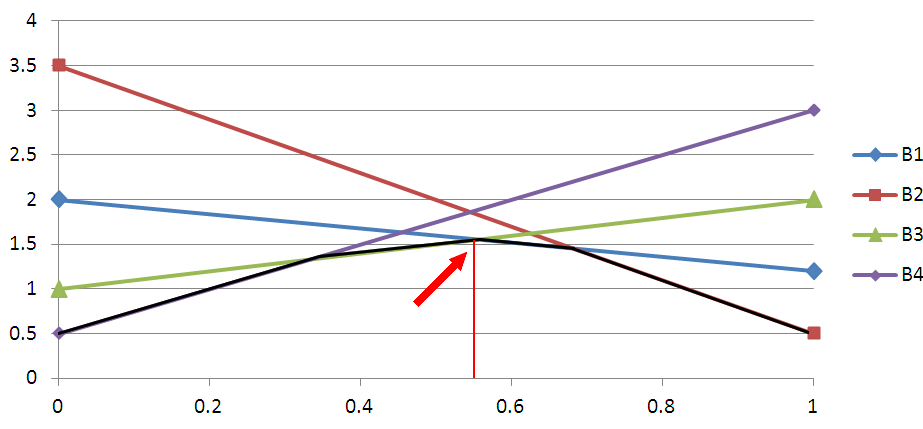

Look at the following example:

Look at the following example:

| B1 | B2 | B3 | B4 |

| A1 | 2 | 3.5 | 1 | 0.5 |

| A2 | 1.25 | 0.5 | 2 | 3 |

The Nash equilibrium p is about 0.55, and Ann's guaranteed payoff for this mix is a little more than 1.5.

Since the resulting point lies at the intersection point of the straight lines belonging to B1 and

B3, Beth will mix between these two pure strategies.

3 × n zero-sum games

A similar approach, but now in 3 dimensions, works for the 3 × n case.

Ann's mix would be p1, p2, p3 with p1+p2+p3=1.

Any such triple of numbers corresponds to a point on a triangle, using barycentric coordinates.

These are calculated as the ratio of shortest distance of the point to one side over the

shortest distance of the third point of the triangle to that same side. Now we build straight lines

perpendicular to the triangle at the three points, and hang a triangle at these pillars

at heights A1,i, A2,i, A3,i for every pure strategy i of Beth.

These triangles are intersecting. The point on the triangle having most "air" above it until it meets the

lowest ceiling is Ann's maximin mix, and the height until this lowest ceiling there is Ann's security level

for mixed strategies.

In the example to the right the triangle and the three pillars are drawn in white.

The matrix is

| B1 | B2 | B3 |

| A1 | 5 | 7 | 4 |

| A2 | 3 | 5 | 6 |

| A2 | 6 | 4 | 5 |

which is just the matrix of CRASH ROCK SCISSORS PAPER discussed above with 5 added to each one of Ann's payoff.

Grab the object and turn it around.

3.2 Small non zero-sum games

3.2.1 The 2×2 Case

The simplest normal forms are for two-players games

where each player has exactly two strategies. That is, we are facing

a payoff matrix of the form

| Left | Right |

| Up | A1,1, B1,1 | A1,2, B1,2 |

| Down | A2,1, B2,1 | A2,2, B2,2 |

There are three cases of mixed Nash equilibria: One where Ann mixes and Beth doesn't,

one where Beth mixes and Ann doesn't, and one where both mix.

Let us look at this third case first.

Assume that Ann chooses "Up" with probability p, 0 < p < 1 and "Down" with probability 1-p.

In the same way, Beth chooses "Left" with probability q, 0 < q < 1 and "Right" with probability 1-q.

The Indifference Theorem above implies that Ann

faces the same expected payoffs when playing Up or Down provided Beth keeps mixing.

These expected payoffs are q·A1,1+(1-q)·A1,2

and q·A2,1+(1-q)·A2,2. Therefore

q·A1,1+(1-q)·A1,2 =

q·A2,1+(1-q)·A2,2

Similar, in a Nash equilibrium Beth must face the same payoffs with both strategies

when facing Ann mixing as described above. Therefore

p·B1,1+(1-p)·B2,1 =

p·B1,2+(1-p)·B2,2

We have two linear equations with two variables p and q. It is even simpler than the general case,

since p does not occur in the first equation and q not in the second.

They are not interrelated, and can therefore be solved separately. For the first we get

q·(A1,1-A1,2)+A1,2 =

q·(A2,1-A2,2)+A2,2

or

q = (A2,2-A1,2)/A1,1+A2,2-A1,2-A2,1)

In the same way, the second equation can be solved for p as

p = (B2,2-B2,1)/(B1,1+B2,2-B2,1-B1,2).

Of course both these values have to be between 0 and 1 in order to get a mixed Nash equilibrium.

The other two cases are easy to analyze: Assume Ann mixes, plays "Up" with probability p

and "Down" with probability 1-p, but that Beth plays the pure strategy "Left".

Then the Indifference Theorem implies that A1,1=A2,1.

Then both "Up" versus "Left" as well as "Down" versus "Left" are pure Nash equilibria,

and every value of p between 0 and 1 would produce a mixed strategy for Ann that would

form a Nash equilibrium with "Left". Therefore we would have infinitely

many mixed Nash equilibria, with two pure ones as extreme cases. The other cases are

similar. So ordinarily we would have at most one mixed Nash equilibrium, with both

Ann and Beth really mixing, or we would have infinitely many of them.

The complete analysis of the 2 × 2 case --- domination, best response,

all pure and mixed Nash equilibria --- can be done with the Excel sheet Nash.xls

on the sheet "Nash22".

3.2.2 The 3×3 Case

The general payoff bimatrix in this case is:

| Left | Middle | Right |

| Up | A1,1, B1,1 | A1,2, B1,2 | A1,3, B1,3 |

| Middle | A2,1, B2,1 | A2,2, B2,2 | A2,3, B2,3 |

| Down | A3,1, B3,1 | A3,2, B3,2 | A3,3, B3,3 |

We have one case where Ann mixes between all three strategies,

three cases where Ann mixes just between two of them, and

three cases where Ann uses a pure strategy, and the same holds for Beth.

Thus we can in principle pair any of Ann's seven cases

with any of Beth's seven cases to get 49 possible patterns for Nash equilibria.

Let us start with the most interesting pattern where both Ann and Beth mix between

all their three strategies. Assume that Ann chooses "Up" with probability p1,

"Middle" with probability p2,

and "Down" with probability 1-p1-p2.

In the same way, Beth chooses "Left" with probability q1, "Middle" with probability q2,

and "Right" with probability 1-q1-q2.

The Indifference Theorem above

gives us two double equations, namely

q1A1,1+q2A1,2+(1-q1-q2)A1,3 =

q1A2,1+q2A2,2+(1-q1-q2)A2,3 =

q1A3,1+q2A3,2+(1-q1-q2)A3,3

p1B1,1+p2B2,1+(1-p1-p2)B3,1 =

p1B1,2+p2B2,2+(1-p1-p2)B3,2 =

p1B1,3+p2B2,3+(1-p1-p2)B3,3

Each of these double equations can be broken into two equations.

So what we really have is a system of four linear equations in the

four variables p1, p2, q1, and q2.

q1A1,1+q2A1,2+(1-q1-q2)A1,3 =

q1A2,1+q2A2,2+(1-q1-q2)A2,3

q1A1,1+q2A1,2+(1-q1-q2)A1,3 =

q1A3,1+q2A3,2+(1-q1-q2)A3,3

p1B1,1+p2B2,1+(1-p1-p2)B3,1 =

p1B1,2+p2B2,2+(1-p1-p2)B3,2

p1B1,1+p2B2,1+(1-p1-p2)B3,1 =

p1B1,3+p2B2,3+(1-p1-p2)B3,3

q1 and q2 occur in the first and the second equation, and

p1 and p2 in the third and the fourth, so

the first two equations can be solved separately from the last two.

We therefore can express q1 and q2 in fairly complicated expressions by

the coefficients A1,1, ... A3,3,

and p1 and p2 in a similar way by B1,1, ... B3,3.

In general we get one solution.

The next case is where Ann mixes between two strategies,

say between "Up" and "Middle" with probabilities p and 1-p, and Beth between all three as before.

We get three equations with three variables

q1A1,1+q2A1,2+(1-q1-q2)A1,3 =

q1A2,1+q2A2,2+(1-q1-q2)A2,3

pB1,1+(1-p)B2,1 =

pB1,2+(1-p)B2,2

pB1,1+(1-p)B2,1 =

pB1,3+(1-p)B2,3

If both Ann and Beth mix between two strategies, essentially the formulas for the

2×2 case can be used.

In the same way as above, if one player mixes and the other plays a pure strategy

in a Nash equilibrium, some of the payoff coefficients of the matrix,

in a row or a column, are identical, and the mixing player could use any mix between the strategies involved

to get a Nash equilibrium.

3.2.3 The 2×2×2 Case with 3 Players

The Indifference Theorem above

is also the key for finding all Nash equilibria in mixed strategies

in three-players games with two pure strategies each, but we will see that and why this case

is much more complicated than the previous two cases. Being more complicated than the

two-players 2×2 case is probably not surprising, but why is it more complicated than the

two-players 3×3 case, which has 18 parameters? Our case considered has 24 parameters.

..................

|

Cindy chooses 1 |

| |

Beth chooses 1 |

Beth chooses 2 |

| Ann chooses 1 |

A1,1,1,B1,1,1,C1,1,1 |

A1,2,1,B1,2,1,C1,2,1 |

| Ann chooses 2 |

A2,1,1,B2,1,1,C2,1,1 |

A2,2,1,B2,2,1,C2,2,1 |

|

|

Cindy chooses 2 |

| |

Beth chooses 1 |

Beth chooses 2 |

| Ann chooses 1 |

A1,1,2,B1,1,2,C1,1,2 |

A1,2,2,B1,2,2,C1,2,2 |

| Ann chooses 2 |

A2,1,2,B2,1,2,C2,1,2 |

A2,2,2,B2,2,2,C2,2,2 |

|

4. Sequentialization II

..........

ROCK-SCISSORS-PAPER has an obvious second mover advantage.

The following is a another game with second mover advantage. There are no pure Nash equilibria

| Left | Right |

| Up | 1,4 | 3,2 |

| Down | 4,1 | 2,3 |

And here is a game, without pure Nash equilibria, where Ann has a first mover

advantage and Beth a second mover advantage.

| Left | Right |

| Up | 1,3 | 4,1 |

| Down | 2,2 | 3,4 |

References

- G.W. Brown, Iterative Solutions of Games by Fictitious Play, Activity Analysis

of Production and Allocation, Cowles Comission for Research in

Economics, Monograph No. 13, John Wiley & Sons (1951)

- J. Robinson, An Iterative Method for Solving a Game, Annals of Mathematics 54 (1951) 296-301.

- Morton D. Davis, Game Theory: A Nontechnical Introduction,

Dover Publications, 1997; in our Library 519.3 D29g

- An Applet for solving arbitrary games:

http://banach.lse.ac.uk/form.html

- An Applet for solving zero-sum games:

http://levine.sscnet.ucla.edu/Games/zerosum.htm

- Carlton E Lemke, J. T. Howson, Jr., Equilibrium Points

of Bimatrix Games, SIAM Journal of Applied Mathematics 12 (1964) 413-423.

- Bernhard von Stengel, Lecture Notes on Game Theory,

http://www.maths.lse.ac.uk/Courses/MA301/lectnotes.pdf

2007

- Ruchira S. Datta, Using Computer Algebra to find Nash Equilibira,

ISAAC 03, (2003)

Look at the following example:

Look at the following example: